10.07.2019

This article originally appeared in the June 10, 2019 issue of SpaceNews magazine.

To prepare for self-driving cars, mobility companies have spent more than a decade gathering data from thousands of vehicles equipped with cameras, radar, lidar, GPS receivers and inertial measurement units. No comparable database exists for companies or government agencies aiming to give spacecraft more autonomy.

“We don’t have the plethora of data or sensors,” Franklin Tanner, Raytheon machine learning and computer vision engineer, said in May at the Space Tech Expo in Pasadena, California. “We can simulate a lot of activity and do modeling but do not have as much of the real-world data.”

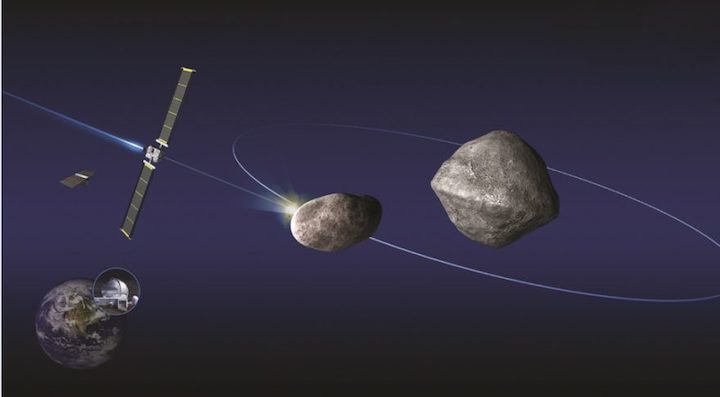

Spacecraft operators will acquire some of the data they need to create and train machine learning algorithms through projects like the Double Asteroid Redirection Test (DART), a NASA mission to send a probe in 2021 to Didymos, a binary asteroid system. DART’s primary mission is slamming a probe into the moon Didymos B to measure the change in its orbit around Didymos A. A secondary goal of DART is testing artificial intelligence for close approach, rendezvous and docking, said Roberto Carlino, NASA Ames Research Center hardware and software test engineer for Astrobee, free-flying robots on the International Space Station.

“There are a lot of players that want to implement AI as at least a secondary part of the mission to begin gathering data,” Carlino said.

Once companies or government agencies begin to signal their interest in acquiring high-fidelity data to train machine learning algorithms for spacecraft, more data are likely to become available. “People will actively try to create that data because there will be value to it,” said Blaine Levedahl, U.S. government programs director for Olis Robotics, a Seattle company that relies on AI to offer varying degrees of autonomy for mobile robotic systems. A similar market is spurring companies to gather and sell training data for ground-based AI systems, he added.

Until a plethora of space data is available, spacecraft operators are considering incremental moves toward autonomy because they see significant benefits.

For national security space, “we want very resilient assets on orbit that are adaptable and responsive to changing threats,” said Christine Stevens, Aerospace Corp. principal engineer for space programs operations. Using AI, a spacecraft may be able to recognize a threat, learn from it and counteract it or take evasive action. Or, a single satellite in a constellation could learn something and propagate its newly acquired knowledge to other satellites in the constellation, Stevens said.

However, spacecraft operators will only embrace AI when they have confidence it will not create new problems.

“When the system sees something it has never seen before, how can we trust that it is not going to do something that would endanger the spacecraft or do a maneuver that will cause problems?” Tanner asked. “Understanding the black box is vitally important in our applications.”

AI applications are sometimes referred to as black boxes because they propose actions or solutions but cannot explain their reasoning. That makes people hesitant to trust them with important decisions.

Spacecraft operators could gain trust in AI through traditional verification and validation activities. Repeatedly verifying that the end product meets its objectives is one possible approach, Stevens said.

Olis Robotics recommends keeping the human operator in the loop. “Most of the time, fully autonomous operation is not possible,” Levedahl said. Plus, developing fully autonomous systems would be extremely expensive. “Is it worth the cost?” he asked. “Or is it better to keep the operator in the loop and increase the autonomy while fielding systems?”

Stevens suggested the industry consider breaking the problem into bite-size pieces. “Perhaps there’s a way to gain confidence in how the system learns and makes decisions,” she said.

One possible approach would be to design and test individual AI modules or containers. Once modules are deemed trustworthy, they could be combined. “Maybe there’s a way to do mission assurance in bite-size pieces and aggregate those together,” Stevens said.

Whatever methods the industry selects to build confidence, it should deliver AI applications that expand the capabilities of next-generation space systems without exaggerating potential benefits, Tanner said.

“We’ve gone through the AI winter and probably through the AI spring,” Tanner said. “We are in the AI summer now, fielding AI and autonomous applications that are making an impact in my field: national security.”

Still, the industry must be vigilant about delivering on AI’s promise without exaggerating its potential benefits, Tanner said.

“If we don’t deliver on some of the promises with systems that are trustworthy, resilient and accomplish the intended goals, we could soon go into another AI winter,” he added.

Source: SN