9.12.2020

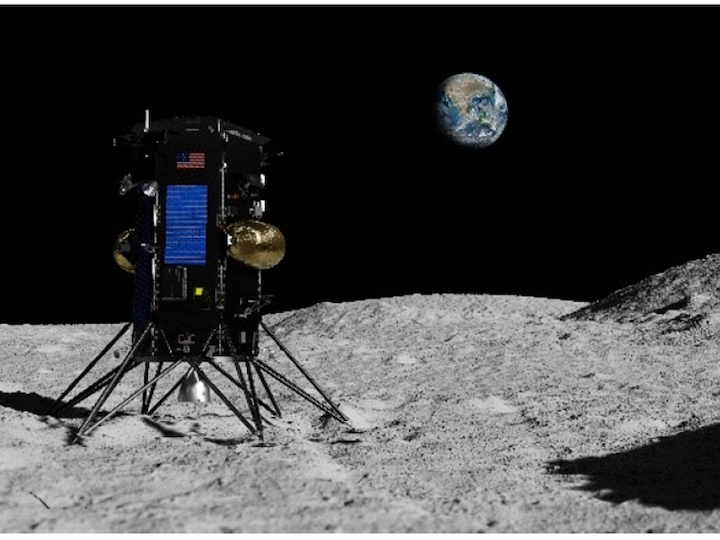

IM-1 set to land on the Moon in the fourth quarter of 2021. Image Credit: Intuitive Machines

In order for future lunar exploration missions to be successful and land more precisely, engineers must equip spacecraft with technologies that allow them to “see” where they are and travel to where they need to be. Finding specific locations amid the moon’s complicated topography is not a simple task.

In research recently published in the AIAA Journal of Spacecraft and Rockets, a multidisciplinary team of engineers demonstrated how a series of lunar images can be used to infer the direction that a spacecraft is moving. This technique, sometimes called visual odometry, allows navigation information to be gathered even when a good map isn’t available. The goal is to allow spacecraft to more accurately target and land at a specific location on the moon without requiring a complete map of its surface.

“The issue is really precision landing,” said John Christian, an associate professor of aerospace engineering at Rensselaer Polytechnic Institute and first author on the paper. “There’s been a big drive to make the landing footprint smaller so we can go closer to places of either scientific interest or interest for future human exploration.”

In this research, Christian was joined by researchers from Utah State University and Intuitive Machines, LLC (IM) in Houston, Texas. NASA has awarded IM multiple task orders under the agency’s Commercial Lunar Payload Services (CLPS) initiative. IM’s inaugural IM-1 mission will deliver six CLPS payloads and six commercial payloads to Oceanus Procellarum in the fourth quarter of 2021. Its IM-2 commercial mission will deliver a NASA drill and other payloads to the lunar south pole in the fourth quarter of 2022.

“The interdisciplinary industry/academia team follows in the footsteps of the NASA Autonomous Landing and Hazard Avoidance Technology (ALHAT) project, which was a groundbreaking multi-center NASA/industry/academia effort for precision landing,” said Timothy Crain, the Vice President of Research and Development at IM. “Using the ALHAT paradigm and technologies as a starting point, we identified a map-free visual odometry technology as being a game-changer for safe and affordable precision landing.”

In this paper, the researchers demonstrated how, with a sequence of images, they can determine the direction a spacecraft is moving. Those direction-of-motion measurements, combined with data from other spacecraft sensors and information that scientists already know about the moon’s orientation, can be substituted into a series of mathematical relationships to help the spacecraft navigate.
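The article does not spell out these mathematical relationships, so what follows is only a minimal sketch of one standard way a direction-of-motion measurement can be formed from two images. It assumes the camera attitude at each image is already known (for example, from a star tracker), so that every feature's line of sight can be expressed in one common frame. For a surface feature seen along unit bearings u1 and u2, the feature and both camera positions lie in a single plane, so the direction of motion t satisfies t · (u1 × u2) = 0. Stacking this constraint over many features and taking the least-squares null vector recovers t up to sign, and requiring the features to lie in front of the camera fixes the sign. All function names are hypothetical, and this is not presented as the formulation used in the paper.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Mat = List[List[float]]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Sequence[float]) -> Vec:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def matmul(A: Mat, B: Mat) -> Mat:
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A: Mat) -> Mat:
    return [[A[j][i] for j in range(3)] for i in range(3)]


def smallest_eigvec_sym3(A: Mat, sweeps: int = 30) -> Vec:
    """Cyclic Jacobi iteration on a symmetric 3x3 matrix; returns the unit
    eigenvector belonging to the smallest eigenvalue."""
    a = [list(row) for row in A]
    V = [[float(i == j) for j in range(3)] for i in range(3)]
    for _ in range(sweeps):
        off = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
        if off <= 1e-30 * sum(x * x for row in a for x in row):
            break  # off-diagonal terms are negligible: a is (numerically) diagonal
        for p, q in ((0, 1), (0, 2), (1, 2)):
            if a[p][q] == 0.0:
                continue
            # Rotation chosen so that the rotated a[p][q] becomes zero.
            theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
            t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
            c = 1.0 / math.sqrt(t * t + 1.0)
            s = t * c
            P = [[float(i == j) for j in range(3)] for i in range(3)]
            P[p][p] = P[q][q] = c
            P[p][q], P[q][p] = s, -s
            a = matmul(transpose(P), matmul(a, P))
            V = matmul(V, P)  # columns of V accumulate the eigenvectors
    k = min(range(3), key=lambda i: a[i][i])
    return (V[0][k], V[1][k], V[2][k])


def direction_of_motion(u1s: Sequence[Vec], u2s: Sequence[Vec]) -> Vec:
    """Estimate the unit direction of motion between two images (hypothetical helper).

    u1s[i] and u2s[i] are unit lines of sight to the same surface feature in
    images 1 and 2, ASSUMED to be already rotated into one common frame using
    the known camera attitude. Because the feature and both camera positions
    are coplanar, every feature gives t . (u1 x u2) = 0.
    """
    normals = [cross(a, b) for a, b in zip(u1s, u2s)]
    # Least squares: minimize sum (n . t)^2 with |t| = 1 -> smallest eigenvector of sum n n^T.
    M = [[sum(n[i] * n[j] for n in normals) for j in range(3)] for i in range(3)]
    t = smallest_eigvec_sym3(M)
    # Resolve the sign: the depth s1 along u1 satisfies s1 * (u1 x u2) = t x u2,
    # and features must lie in front of the camera (s1 > 0).
    score = sum(dot(cross(t, b), n) for b, n in zip(u2s, normals))
    return t if score > 0 else (-t[0], -t[1], -t[2])
```

Only the direction, not the distance traveled, can be recovered from the images alone, because the overall scale is lost. This is consistent with the article's point that such measurements are combined with other spacecraft sensor data. A small Jacobi eigen-solver is used so the sketch needs only the standard library; with NumPy, the last right-singular vector from `numpy.linalg.svd` of the stacked normals would serve the same purpose.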

“This is information that we can feed into a computer, again in concert with other measurements, that all gets put together in a way that tells the spacecraft where it is, where it’s going, how fast it’s going, and what direction it’s pointed,” Christian said.

About Intuitive Machines

Intuitive Machines is a premier provider and supplier of space products and services that enable sustained robotic and human exploration to the Moon, Mars and beyond. We drive markets with competitive world-class offerings synonymous with innovation, high quality, and precision. Whether leveraging state-of-the-art engineering tools and practices or integrating research and advanced technologies, our solutions are insightful and have a positive impact on the world.

Source: Rensselaer Polytechnic Institute (RPI)